Foreword

Core Technologies Fueling Digital Transformations


No one would question that the most catalyzing technology of the digital transformation happening today is Artificial Intelligence (AI) and its adjacent technologies. However, the most interesting aspect of AI is that, despite the amazing progress achieved over the last decade, its technologies are still in their infancy in terms of practical adoption and economic impact, as reported by McKinsey analyses [1]. Thus, we can expect greater technology penetration and visible industry transformations in the near future.

AI was born in the mid-1950s, thanks to pioneers like John McCarthy and Marvin Minsky, who had built one of the first artificial neural network machines (SNARC) in 1951. However, only recently has AI achieved significant practical success and adoption, thanks to impressive progress in hardware technology, software technology and algorithm capabilities, and to the massive amounts of data generated by social media and the Internet in general. More disruptive innovation in these areas will be needed to continue fueling and driving the growth of the Digital Transformation.

Hardware Innovations

The hardware technologies enabling such transformations can be grouped into three key areas.

Computational Power is the engine needed to deliver new disruptive software technologies, algorithms, and applications. The evolution of microprocessor architectures from CPUs to GPUs and AI Accelerators, together with the innovations brought by CMOS foundries, such as sub-10-nanometer CMOS technologies [2] and 3D Multichip Integration [3], was instrumental to the industry’s trajectory in general. Despite physical challenges, technological advances have kept alive the prediction (“direction” would indeed be a better term) of Moore’s law, which expects transistor density to double every two years with equivalent performance and cost improvements.
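The doubling rule compounds as D(t) = D0 · 2^(t/T), with t in years and T the doubling period (two years in the statement above). A minimal Python sketch of that arithmetic; the function name and the normalized starting density are illustrative assumptions, not data from the references:

```python
def projected_density(d0: float, years: float, doubling_period: float = 2.0) -> float:
    """Project transistor density `years` from now, assuming it doubles
    every `doubling_period` years, i.e. D(t) = D0 * 2**(t / T)."""
    return d0 * 2 ** (years / doubling_period)


# Hypothetical starting density normalized to 1.0 (not real process data).
for years in (2, 4, 10):
    print(f"after {years:2d} years: {projected_density(1.0, years):.0f}x")
```

Ten years at this pace compounds to a 32x increase; stretching the doubling period to three years (`doubling_period=3.0`) would yield only about 10x over the same decade, which is why even a modest slowdown matters.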


The Hot Chips conference, held at Stanford this summer, was a great showcase of impressive processor innovations from both big players and start-ups [4].

Edge Computing, in this context, essentially means running AI processing on chips located at the edge of the network. It is becoming predominant and is expected to drive chip market growth over the next five years, because it allows large amounts of computation to be performed directly at the edge of the network, close to the data source as part of the IOT device, instead of going through the cloud network and centralized data centers, which adds latency. Examples of Edge Computing applications include Public Safety and Emergency Response, Facial Recognition, Automotive, Robotics, and Drones.

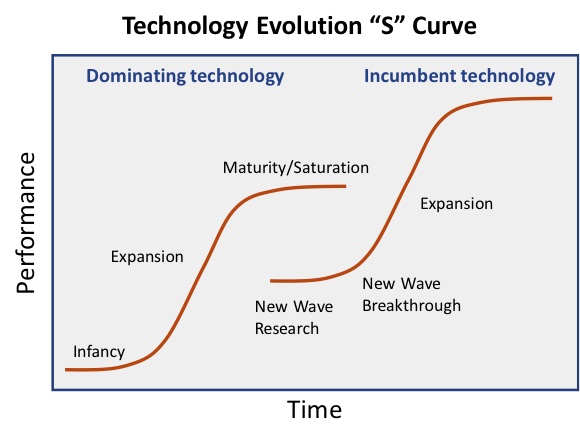

Furthermore, to keep increasing computational power and to keep pace with Moore’s law, disruptive technologies and new paradigms for computing, and for the related memory needed to manage growing data volumes, will be required before the innovative tricks of existing technologies are completely exhausted and the well-known “S” development curve reaches saturation.

Carbon Nanotube Field Effect Transistors [5], Quantum Computing [6] and Neuromorphic Chips [7] have appeared on the horizon with impressive achievements.


Sensing technologies, embedded into the IOT ecosystem and network, are the interface between the human and machine worlds. Today, mature sensing technology is deployed in both consumer and industrial markets.

An iPhone integrates a large variety of sensors including, among others, a Proximity Sensor, Ambient Light Sensor, Accelerometer, Gyroscope, Compass, Touch ID, Face ID and, as the latest introduction in the iPhone 11, an Ultra-Wideband (UWB) chip for spatial awareness.

In the industrial sector, sensor networks and IOT are revolutionizing production from the standpoint of both operations and supply chain management. Sensors embedded in Wearable Devices and Smart Textiles, or in instruments for Augmented Reality (AR) and Virtual Reality (VR), are expected to improve productivity in manufacturing and other industries.

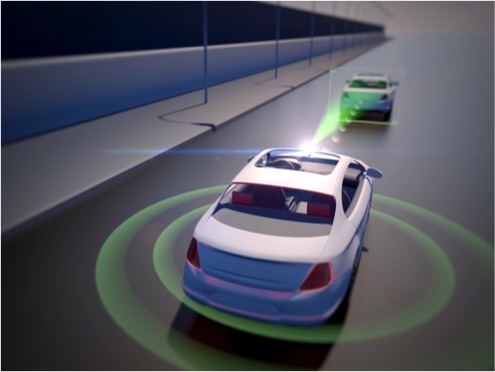

Motion Sensors, LIDAR (Light Detection and Ranging), Radar, Cameras and Sensor Fusion are the core elements and techniques for managing driving and safety in future Autonomous Vehicles (AV).
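Sensor fusion combines several noisy measurements of the same quantity into a better estimate. One of its simplest forms, equivalent to the measurement-update step of a Kalman filter for a static scalar state, is inverse-variance weighting. The sketch below assumes independent, unbiased sensors with known variances; the LIDAR and radar readings are hypothetical:

```python
def fuse(estimates):
    """Fuse independent, unbiased (value, variance) estimates by inverse-variance weighting.
    Returns the fused value and its (smaller) variance."""
    weights = [1.0 / var for _, var in estimates]
    total = sum(weights)
    value = sum(w * x for w, (x, _) in zip(weights, estimates)) / total
    return value, 1.0 / total


# Hypothetical distance readings to the same obstacle, in meters: (value, variance).
lidar = (20.0, 0.01)   # precise sensor
radar = (20.6, 0.09)   # noisier sensor
distance, variance = fuse([lidar, radar])
print(f"fused distance {distance:.2f} m, variance {variance:.4f} m^2")
```

The fused estimate leans toward the more precise LIDAR reading, and its variance is lower than either input, which is the basic payoff of combining sensors.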

As with computing, breakthroughs in sensing technology will be needed to overcome present challenges, in particular in the field of AV. For example, in LIDAR technology, various start-ups are working on innovations to reduce size, relax vehicle-speed limitations, and improve range, resolution, robustness to environmental noise and cost, among others. Innovations are also emerging as possible complete alternatives to LIDAR, such as 3D Passive Optics [8] or Ultra-Wide-Band Subterranean Radar [9].
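Pulsed time-of-flight LIDAR measures distance by timing a laser pulse’s trip to the target and back, so range is half the round-trip time multiplied by the speed of light. A minimal sketch of that relation (function names are illustrative; real sensors add calibration, multi-echo handling and noise filtering):

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0  # exact, by SI definition


def tof_range_m(round_trip_s: float) -> float:
    """Target distance from a pulse's round-trip time: d = c * t / 2
    (halved because the light travels out and back)."""
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2.0


def range_error_m(timing_error_s: float) -> float:
    """Distance error caused by a given error in measuring the round-trip time."""
    return SPEED_OF_LIGHT_M_PER_S * timing_error_s / 2.0


print(f"1 us echo      -> {tof_range_m(1e-6):.1f} m")
print(f"1 ns timing err -> {range_error_m(1e-9) * 100:.1f} cm")
```

A 1 µs echo corresponds to about 150 m, and each nanosecond of timing error translates into about 15 cm of range error, which hints at why improving range and accuracy at low cost is hard.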

In future smart cities, smart homes and smart factories, sensor technologies need to be interconnected with each other through an IOT network. This is not an easy task for commercial users, but progress in agnostic plug-and-play solutions will enable simpler and quicker commercial implementations and deployments. 20 billion connected devices are expected by 2020.

Advanced Connectivity is the backbone that enables the enormous flow of data to be transmitted and processed at ever-growing speed with, ideally, zero latency, whether human-to-human, human-to-machine, or machine-to-machine.

The 5G network will strive toward these ideal targets by delivering new technologies, architectures and standards to support a ubiquitous next-generation communication infrastructure. Some of the 5G target specifications [10] and challenges with respect to the 4G network are: 10 to 100 times higher data rates, 1000x bandwidth per unit area, 100% coverage, a 90% reduction in network energy usage, support for 100x more connected IOT devices, and latency as low as 1 ms.

With these targets in mind, the International Telecommunication Union (ITU) groups 5G applications into three usage scenarios:

- Enhanced Mobile Broadband (eMBB)

- Ultra-Reliable and Low-latency Communications (uRLLC)

- Massive Machine Type Communications (mMTC).

The innovations that make 5G possible include Massive MIMO (Multiple-Input Multiple-Output) antenna array technology; the use of a wider frequency spectrum, up to mmWave, with the related challenging front-end transceiver RFICs; advanced Orthogonal Frequency-Division Multiplexing (OFDM) with greater flexibility and scalability; flexible Time Division Duplex (TDD) subframe design; and advanced spectrum sharing techniques.
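At its core, OFDM places data symbols on many orthogonal subcarriers using an inverse discrete Fourier transform, then prepends a cyclic prefix (a copy of the symbol’s tail) to absorb multipath delay spread. The toy sketch below uses QPSK, 8 subcarriers and a 2-sample prefix, all arbitrary choices, and a direct O(N²) DFT in place of an FFT; real 5G NR uses much larger FFT sizes plus channel estimation and equalization, none of which are modeled here:

```python
import cmath
import math

# Gray-coded QPSK constellation: two bits per subcarrier.
QPSK = {(0, 0): 1 + 1j, (0, 1): -1 + 1j, (1, 1): -1 - 1j, (1, 0): 1 - 1j}


def idft(freq):
    """Inverse DFT (direct O(N^2) form; real systems use an FFT)."""
    n = len(freq)
    return [sum(freq[k] * cmath.exp(2j * math.pi * k * t / n) for k in range(n)) / n
            for t in range(n)]


def dft(samples):
    """Forward DFT, the receiver-side counterpart of idft()."""
    n = len(samples)
    return [sum(samples[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
            for k in range(n)]


def ofdm_modulate(bits, n_sub=8, cp_len=2):
    """Map bit pairs onto n_sub subcarriers, go to the time domain, prepend a cyclic prefix."""
    if len(bits) != 2 * n_sub:
        raise ValueError("need exactly two bits per subcarrier")
    freq = [QPSK[(bits[2 * k], bits[2 * k + 1])] for k in range(n_sub)]
    samples = idft(freq)
    return samples[-cp_len:] + samples  # cyclic prefix = copy of the symbol's tail


def ofdm_demodulate(samples, n_sub=8, cp_len=2):
    """Drop the cyclic prefix, return to the frequency domain, pick the nearest QPSK point."""
    freq = dft(samples[cp_len:cp_len + n_sub])
    bits = []
    for symbol in freq:
        nearest = min(QPSK, key=lambda b: abs(symbol - QPSK[b]))
        bits.extend(nearest)
    return bits


tx_bits = [0, 1, 1, 0, 1, 1, 0, 0, 1, 0, 0, 1, 1, 1, 0, 0]
tx = ofdm_modulate(tx_bits)
print(len(tx), ofdm_demodulate(tx) == tx_bits)  # prints: 10 True (ideal channel)
```

Part of the flexibility and scalability of 5G NR OFDM comes from its numerology: subcarrier spacing scales as 15 kHz × 2^μ (15, 30, 60, 120 kHz and beyond), with correspondingly shorter symbols and slots, so the same waveform family can serve both wide-area low-band cells and short-range mmWave links.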


The enhanced performance, programmability and flexibility of 5G will have a direct impact on many industries and applications, and will catalyze new business models and opportunities. The first deployments of so-called 4.5G and 5G took place over the past year in several countries, and a more extensive take-off is expected during 2020. A few months ago, T-Mobile, in collaboration with Qualcomm and Ericsson, announced the first 5G data call on 600 MHz low-band spectrum [11].

R&D teams of large Telecom companies are already investigating solutions for future 6G.

Complementary building blocks to the wireless 5G network are Low Earth Orbit (LEO) satellites and fiber optic interconnection.

Advances in smaller, lighter satellites and the consequent reduction in launch costs have stimulated the development and first deployments of Low Earth Orbit satellite networks able to deliver broadband mmWave uplink and downlink connectivity anywhere in the world. Eutelsat, SpaceX and OneWeb are some of the players in this area.

Fiber optic infrastructure has delivered extremely large bandwidth for years, thanks to the nature of the fiber itself. The bandwidth capability deployed in long-haul, metro, and data center networks has doubled every three years. The fourth generation of coherent optics, 400G, enabled by 7nm CMOS technology, is expected to be deployed in production in 2020.

Advances in Silicon Photonics are further improving the level of integration, enabling greater multiplexing capability and, consequently, more bandwidth at lower cost.

5G needs fiber optics within its network, in particular in the fronthaul, which connects the C-RAN (Centralized Radio Access Network) to the radio heads of remote cell sites.

Software Innovations

The software technologies that enabled, and continue to fuel, the digital transformation range from programming languages, computing and data storage/retrieval paradigms and algorithms, and communications protocols, to security and cryptography. Let’s take a look at the advances in software technologies in three broad areas that characterize their evolution since the dawn of the World Wide Web in the early ‘90s.

Laying the foundation of today’s Cloud Services/Computing represents childhood, when the basics are acquired. Today’s burgeoning adoption of Machine Learning (ML) and AI represents the formative teenage and early adult years, when accumulated knowledge builds into intelligence and reasoning. The development and exploration of Blockchain technologies, now underway, represents the prime of adulthood, when that knowledge and intelligence are applied to business and social ecosystems.

Cloud Computing evolved out of the need to provide sub-second Internet search at Google and to support large-scale web portals at Yahoo! and e-commerce at Amazon. Traditional server clustering for enterprise client-server applications was simply unable to scale up to the rapid expansion of web applications. Dynamically scalable (i.e. scale-out) computing architectures, based on inexpensive commodity servers and high-speed networking equipment, that support fault-tolerant, non-stop web-based services were critical to these web pioneers.

A multitude of horizontally scalable computing technologies were developed and evolved to meet the unquenchable thirst for web data and services. These provided the ability to manage the provisioning and dynamic assignment of computing resources, and the startup and shutdown of applications, on tens of thousands of physical bare-metal commodity servers and millions of virtual servers, all housed in warehouse-sized Internet Data Centers (IDC).

Now, applications no longer need to be bound to a physical server. Web service providers are able to run the same application on thousands of “servers” simultaneously, serving millions of users across wide geographies and scaling web services up and down dynamically based on user traffic. The terms “cloud computing” and “cloud services” were born because the extent and “shape” of the computing resources and web services change constantly and quickly, just like a cloud.
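Scaling up and down based on user traffic is typically driven by an autoscaling policy. A minimal target-tracking sketch, assuming a hypothetical fixed capacity per instance; production autoscalers also smooth their metrics and add cool-down periods to avoid oscillation:

```python
import math


def desired_instances(requests_per_s: float, capacity_per_instance: float,
                      min_instances: int = 1, max_instances: int = 1000) -> int:
    """Target-tracking rule: run just enough instances for the current load, within bounds."""
    needed = math.ceil(requests_per_s / capacity_per_instance)
    return max(min_instances, min(max_instances, needed))


# Hypothetical traffic samples over a day; each instance assumed to serve 500 req/s.
for load in (200, 12_000, 90_000, 3_000):
    print(f"{load:>6} req/s -> {desired_instances(load, 500)} instances")
```

The lower bound keeps the service reachable at idle, while the upper bound caps cost if traffic spikes unexpectedly.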

The effectiveness and strength of Cloud Computing depend on the convergence of three different fields, as described below.

The early clusters of web and relational database servers with Structured Query Language (SQL) interfaces, front-ended by server load balancers (SLB), evolved into a slew of scale-out technologies, including Big Data processing, NoSQL databases [12], cloud storage technologies [13, 14], datacenter management tools, Infrastructure-as-a-Service (IaaS) management and Development-and-Operations (DevOps) tools, just to mention some of them. These technologies allow the processing of massive amounts of data, such as the Google search engine’s indexing of all crawlable web content and its delivery of results for the search queries of millions of users, or the tens of millions of online e-commerce transactions, logistics tracking and everyday order deliveries.
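Much Big Data processing follows the MapReduce pattern popularized by Google: a map step runs in parallel over input splits, a shuffle groups the intermediate results by key, and a reduce step aggregates each group. A single-process Python sketch of the classic word-count example; the distribution of work across servers is only indicated in comments:

```python
from collections import defaultdict
from itertools import chain


def map_phase(split: str):
    """Emit (word, 1) pairs for one input split; on a cluster each split runs on its own server."""
    return [(word.lower(), 1) for word in split.split()]


def shuffle(pairs):
    """Group intermediate values by key, as the framework does between the two phases."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(groups):
    """Aggregate each key's values; different keys can be reduced on different servers."""
    return {key: sum(values) for key, values in groups.items()}


splits = ["the cloud scales out", "the cloud serves the web"]
intermediate = chain.from_iterable(map_phase(s) for s in splits)
print(reduce_phase(shuffle(intermediate)))
```

Because map and reduce calls are independent of each other, the framework can spread them over thousands of commodity servers and simply re-run any task whose server fails.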

All of these cloud-based online services keep running while software patches and updates, produced through agile development, are deployed in the background through a streamlined DevOps process, transparently to the users of these cloud services. Similarly, Infrastructure-as-a-Service (IaaS) and Platform-as-a-Service (PaaS) offerings, such as Amazon Web Services (AWS) and Microsoft Azure, enable many enterprises, large and small, to use computing, data storage, and network resources at the infrastructure level on a pay-per-use basis.

Cloud scale-out is moving beyond virtual machines (VMs) to containers that host lightweight microservices, which can be combined quickly and dynamically to form new cloud services. Also, as part of enabling edge computing, serverless architectures built on lambda functions, which allow fine-grained distribution and balancing of the computation load, are starting to gain traction.
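In the serverless model a service is reduced to small, stateless functions that the platform invokes once per event, running as many copies concurrently as the event rate requires. A minimal sketch in the shape of an AWS Lambda Python handler; the `(event, context)` signature and the `statusCode`/`body` response follow Lambda’s conventions, while the `temperature_c` field and the alert threshold are hypothetical:

```python
import json


def lambda_handler(event, context):
    """Stateless per-event function; `context` (runtime metadata) is unused here."""
    reading = float(event["temperature_c"])  # hypothetical field sent by an IOT sensor
    alert = reading > 80.0                   # hypothetical alert threshold
    return {"statusCode": 200, "body": json.dumps({"alert": alert})}


# Local invocation for testing; on AWS Lambda the runtime supplies event and context.
print(lambda_handler({"temperature_c": 91.5}, None))
```

Because the function keeps no state between calls, the platform is free to start, stop or relocate copies of it, which is what makes the fine-grained load distribution described above possible.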

Network Technologies that take advantage of and enhance the growing hybrid cloud deployments, the expanding IOT networks, and the incoming 5G cellular networks include Virtual Networks, Software Defined Networking (SDN), Network Functions Virtualization (NFV) [15] and the Open Network Automation Platform (ONAP), to mention a few.

These technologies enable the fine-grained partitioning and allocation of network resources and bandwidth, integration of sophisticated packet processing and data transformation functions within the network fabric, and real-time, policy-driven orchestration and automation of the physical and the virtual network functions. All of these will enable the communications and cloud services providers to rapidly automate and deploy new services, while supporting complete lifecycle management.
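In SDN, a logically centralized controller programs the data plane by installing prioritized match-action rules in each switch’s flow table, an approach popularized by OpenFlow. A toy Python sketch of such a table; the field names, action strings and table-miss behavior are simplified assumptions, not the OpenFlow protocol itself:

```python
from dataclasses import dataclass, field


@dataclass
class FlowRule:
    priority: int
    match: dict   # header fields that must all be equal, e.g. {"vlan": 100}
    action: str   # e.g. "forward:1" or "drop"


@dataclass
class FlowTable:
    rules: list = field(default_factory=list)

    def install(self, rule: FlowRule) -> None:
        """What a controller does when it pushes a policy to a switch."""
        self.rules.append(rule)
        # Highest priority first; the sort is stable, so ties keep install order.
        self.rules.sort(key=lambda r: r.priority, reverse=True)

    def lookup(self, packet: dict) -> str:
        """Apply the action of the highest-priority rule whose fields all match."""
        for rule in self.rules:
            if all(packet.get(k) == v for k, v in rule.match.items()):
                return rule.action
        return "send_to_controller"  # table miss: ask the controller what to do


table = FlowTable()
table.install(FlowRule(10, {"vlan": 100}, "forward:1"))             # tenant A traffic
table.install(FlowRule(20, {"vlan": 100, "dst_port": 22}, "drop"))  # policy: no SSH for tenant A
print(table.lookup({"vlan": 100, "dst_port": 443}))  # forward:1
print(table.lookup({"vlan": 100, "dst_port": 22}))   # drop
print(table.lookup({"vlan": 200, "dst_port": 443}))  # send_to_controller
```

Partitioning by VLAN and layering higher-priority policy rules on top is a small-scale picture of the fine-grained, policy-driven control described above.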

Cybersecurity Technologies are critical to protecting cloud services and the vast number of users and devices across ever-expanding attack surfaces. These technologies include Identity Verification, such as two- and multi-factor authentication; Data Privacy Protection and Loss Prevention, such as encryption and tokenization; and Network Infrastructure Protection, such as firewalls and intrusion detection and prevention, among others.
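Many two-factor schemes use time-based one-time passwords (TOTP, RFC 6238, built on HOTP from RFC 4226): the server and the user’s authenticator share a secret, and each independently derives a short code from that secret and the current time. A minimal sketch using only the Python standard library; production systems also tolerate small clock drift and rate-limit attempts:

```python
import hashlib
import hmac
import struct
import time
from typing import Optional


def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    """HMAC-based one-time password (RFC 4226): HMAC-SHA1 plus dynamic truncation."""
    mac = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = mac[-1] & 0x0F                         # low nibble of last byte picks 4 bytes
    code = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def totp(secret: bytes, at: Optional[float] = None, step: int = 30, digits: int = 6) -> str:
    """Time-based OTP (RFC 6238): HOTP over the count of `step`-second intervals since the epoch."""
    now = time.time() if at is None else at
    return hotp(secret, int(now // step), digits)


# RFC 6238 test vector (SHA-1 secret "12345678901234567890", T = 59 s, 8 digits).
print(totp(b"12345678901234567890", at=59, digits=8))  # 94287082
```

Only the shared secret must stay private; the code itself is useless to an attacker once its 30-second window has passed.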

Cybersecurity is very much a cat-and-mouse game. Security is never going to be 100%, and zero-day exploits (attacks on software vulnerabilities that are unknown to, or unaddressed by, those who should be interested in mitigating them) will continue. AI is increasingly being applied to counter sophisticated cyber-attacks, for example through Context-Aware Behavioral Analytics for threat identification [16].

While public key cryptography (for example, with 256-bit elliptic-curve keys) is standard practice today, quantum cryptography is being developed to ensure an advanced cryptographic capability for protecting network communications despite the computing advances, notably in quantum computing, that are expected to render current public key cryptography ineffective.
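Public key schemes rest on problems that are easy to compute in one direction but believed infeasible to reverse on classical computers. A toy Diffie-Hellman key agreement, with deliberately tiny and insecure parameters, illustrates the idea; the discrete-logarithm problem it relies on (like the factoring behind RSA) is what Shor’s algorithm would solve on a large quantum computer, which is the kind of computing advance the paragraph above refers to:

```python
import secrets

# Toy public parameters, far too small to be secure: prime modulus P and generator G.
# Real systems use 2048-bit or larger groups, or elliptic curves such as X25519.
P = 23
G = 5


def make_keypair():
    """Private key: random exponent in [1, P-2]; public key: G**private mod P."""
    private = secrets.randbelow(P - 2) + 1
    return private, pow(G, private, P)


def shared_secret(own_private: int, other_public: int) -> int:
    """Both parties reach the same value because (G**b)**a == (G**a)**b mod P."""
    return pow(other_public, own_private, P)


alice_private, alice_public = make_keypair()
bob_private, bob_public = make_keypair()
# Only public keys cross the network; an eavesdropper must solve a discrete
# logarithm to recover a private key, which is trivial here only because P is tiny.
assert shared_secret(alice_private, bob_public) == shared_secret(bob_private, alice_public)
print("shared secret agreed:", shared_secret(alice_private, bob_public))
```

With private keys 6 and 15, for instance, the public keys are 8 and 19 and both sides derive the shared secret 2.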

Artificial Intelligence traces its roots to the early days of digital computing. John McCarthy first coined the term Artificial Intelligence in 1956. During the Golden Years that ensued, the early AI pioneers, including Marvin Minsky, Seymour Papert, Joseph Weizenbaum, Terry Winograd and others, ushered in the dawn of symbolic logic, natural language processing, machine vision, robotics, and artificial neural networks [17].

Between the “AI winters” of the late 1970s and of the late 1980s through the mid-1990s, expert systems and knowledge-based systems were the major focus of AI research, along with the first significant progress in artificial neural networks (ANN), driven by the backpropagation work of David Rumelhart, Geoffrey Hinton and Ronald Williams.
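Backpropagation applies the chain rule layer by layer to obtain the gradient of the loss with respect to every weight, which gradient descent then uses to update the weights. A minimal pure-Python sketch training a 2-2-1 sigmoid network on XOR; the architecture, learning rate, seed and epoch count are arbitrary illustrative choices, and a network this small can sometimes settle in a local minimum:

```python
import math
import random


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


XOR = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]


def train(epochs: int = 5000, lr: float = 0.5, seed: int = 0) -> list:
    """Train a 2-2-1 sigmoid network on XOR with full-batch gradient descent.
    Returns the squared-error loss recorded at each epoch."""
    rng = random.Random(seed)
    w1 = [[rng.uniform(-1, 1), rng.uniform(-1, 1)] for _ in range(2)]  # input -> hidden
    b1 = [0.0, 0.0]
    w2 = [rng.uniform(-1, 1), rng.uniform(-1, 1)]                      # hidden -> output
    b2 = 0.0
    losses = []
    for _ in range(epochs):
        gw1 = [[0.0, 0.0], [0.0, 0.0]]
        gb1 = [0.0, 0.0]
        gw2 = [0.0, 0.0]
        gb2 = 0.0
        loss = 0.0
        for x, y in XOR:
            # Forward pass.
            h = [sigmoid(w1[j][0] * x[0] + w1[j][1] * x[1] + b1[j]) for j in range(2)]
            out = sigmoid(w2[0] * h[0] + w2[1] * h[1] + b2)
            loss += 0.5 * (out - y) ** 2
            # Backward pass: chain rule from the loss to the output pre-activation...
            d_out = (out - y) * out * (1.0 - out)
            gb2 += d_out
            for j in range(2):
                gw2[j] += d_out * h[j]
                # ...then through w2[j] and the hidden sigmoid to the hidden pre-activation.
                d_h = d_out * w2[j] * h[j] * (1.0 - h[j])
                gw1[j][0] += d_h * x[0]
                gw1[j][1] += d_h * x[1]
                gb1[j] += d_h
        losses.append(loss)
        # Gradient-descent step on every parameter.
        b2 -= lr * gb2
        for j in range(2):
            w2[j] -= lr * gw2[j]
            b1[j] -= lr * gb1[j]
            w1[j][0] -= lr * gw1[j][0]
            w1[j][1] -= lr * gw1[j][1]
    return losses


losses = train()
print(f"loss: {losses[0]:.3f} -> {losses[-1]:.3f}")
```

Deep learning frameworks automate exactly this bookkeeping (automatic differentiation) for networks with millions of weights.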

Symbolic programming, natural language processing (in particular speech recognition), and machine vision made steady progress through the first decade of the 21st century. However, the advent of deep neural networks (DNN), first demonstrated when Alex Krizhevsky won the 2012 ImageNet Large Scale Visual Recognition Challenge by a wide margin with AlexNet, a deep convolutional neural network (CNN), opened the floodgates.
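The building block that AlexNet and its successors stack is the convolutional layer: a small kernel slides over the image and produces a feature map that responds to a local pattern, usually followed by a nonlinearity such as ReLU. A minimal pure-Python sketch with a hand-picked edge-detecting kernel; in a trained CNN the kernel weights are learned, and real layers add many channels, strides and padding:

```python
def conv2d(image, kernel):
    """'Valid' 2D cross-correlation, the operation CNN layers call convolution."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [[sum(image[i + a][j + b] * kernel[a][b]
                 for a in range(kh) for b in range(kw))
             for j in range(out_w)]
            for i in range(out_h)]


def relu(feature_map):
    """Zero out negative responses."""
    return [[max(0, v) for v in row] for row in feature_map]


# A 4x5 image with a dark-to-bright vertical edge between columns 1 and 2.
image = [[0, 0, 9, 9, 9] for _ in range(4)]
# Hand-written vertical-edge kernel (illustrative; a CNN would learn its weights).
kernel = [[-1, 1],
          [-1, 1]]
print(relu(conv2d(image, kernel)))  # [[0, 18, 0, 0], [0, 18, 0, 0], [0, 18, 0, 0]]
```

The feature map is nonzero only where the edge is, and stacking many such layers lets a network build up from edges to textures, parts and whole objects.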


Various deep neural networks quickly followed, including the supervised and semi-supervised residual network (ResNet) [18], the long short-term memory (LSTM) recurrent neural network (RNN) [19], the unsupervised variational autoencoder (VAE) [20] and the generative adversarial network (GAN). These form the field of deep learning (DL), which, in turn, is helping to push progress in reinforcement learning (RL) and transfer learning.

By shifting the software programming paradigm from explicit logic to classification, inference and prediction, DL is also driving advances in a multitude of technologies and applications, such as object detection, tracking, and recognition (including facial recognition) in real-time and recorded images and video; speech recognition and language translation for virtual personal assistants; and detection of and protection from cyber security attacks, whether spam and malware, trojan-horse digital data theft, or the hot topic of cyber warfare between nation states.

Similarly, DL is helping to accelerate advances in other industries, including autonomous vehicles and drones for transportation and logistics, DNA sequencing and genetic engineering in biotech, and protein design and drug discovery in the pharmaceutical industry. In effect, AI is finding entry into just about any place where data can be amassed and knowledge extracted.

Even with all the tremendous advances, the true Turing Test is yet to be passed. With further advances in sparse learning, transfer learning, unsupervised learning and logical reasoning, more applications will open up in advertising, agriculture, education, healthcare, legal, manufacturing, environment and natural resource management, deep-space and deep-sea exploration, etc. While most AI researchers don’t expect artificial general intelligence (AGI) to become a reality before the middle of the century, most things that humanity wants to automate will see some level of AI infusion. It is both exciting and a bit scary to envision the future!

Blockchain was first developed for a world that was fast losing trust in the traditional financial services ecosystem following the 2008 market crash. The basic form of a blockchain comprises a time-stamped series of immutable data records (i.e. a chain of data blocks) of requested and completed transactions, which are disseminated in a peer-to-peer (P2P) network using a gossip protocol. Once a new block is verified by the voluntarily participating miners via a consensus protocol, the block is added to the blockchain by the nodes in the network that hold a copy of it. These replicated copies of the blockchain thus form the distributed, shared, digital ledger.
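The time-stamped, hash-linked structure described above, together with Bitcoin-style proof of work, can be sketched in a few lines of Python. This is a single-node illustration under simplifying assumptions (JSON encoding, a leading-zeros difficulty target, no Merkle tree, no networking or consensus among peers); real blockchains differ in many details:

```python
import hashlib
import json
import time


def block_hash(block: dict) -> str:
    """SHA-256 of a canonical (key-sorted) JSON encoding of the block."""
    return hashlib.sha256(json.dumps(block, sort_keys=True).encode()).hexdigest()


def mine_block(prev_hash: str, transactions: list, difficulty: int = 3) -> dict:
    """Proof of work: increment the nonce until the hash starts with `difficulty` zeros."""
    block = {"prev_hash": prev_hash, "timestamp": time.time(),
             "transactions": transactions, "nonce": 0}
    while not block_hash(block).startswith("0" * difficulty):
        block["nonce"] += 1
    return block


def is_valid_chain(blocks: list, difficulty: int = 3) -> bool:
    """Each block must satisfy the work target and reference its predecessor's hash."""
    if not all(block_hash(b).startswith("0" * difficulty) for b in blocks):
        return False
    return all(cur["prev_hash"] == block_hash(prev) for prev, cur in zip(blocks, blocks[1:]))


genesis = mine_block("0" * 64, ["genesis"])
ledger = [genesis, mine_block(block_hash(genesis), ["alice->bob: 5"])]  # hypothetical transaction
print(is_valid_chain(ledger))  # True
genesis["transactions"] = ["alice->bob: 500"]  # tamper with recorded history
print(is_valid_chain(ledger))  # False: the stored link no longer matches
```

Rewriting an old block would require redoing its proof of work and that of every later block, faster than the honest network extends the chain, which is what makes the records effectively immutable.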

From Blockchain technologies came the first digital cryptocurrency, Bitcoin, followed by a number of other cryptocurrencies, many of them built on the popular Ethereum platform and its currency, Ether, and the recently announced stablecoin Libra, which is being promoted by Facebook and the Libra Association members.

The primary adoption challenge has been the simple fact that these cryptocurrencies were originally intended to be an alternative to any country’s legal currency, and thus not subject to existing policies, regulations, and financial systems. As such, they aren’t backed by any real currency, nor has there been a widely accepted mechanism for exchanging cryptocurrencies for real currencies. What the public has witnessed of Bitcoin is a widely fluctuating and speculative financial asset, more like an equity than a currency.

Technologically, with only 11 years of history, blockchain technologies are still very much in their infancy. The fully decentralized P2P network design of the original blockchain was “heavyweight” in terms of both proof-of-work computation and communications, as blockchains grow in length.

Besides the cryptocurrency application that has been in the limelight, which represents monetary instruments, there is a wide range of other potential applications, from tracking pharmaceutical supply chains, digital ownership records of physical and digital assets, and privacy protection of personal and medical records, to legal contractual instruments, with more being defined as time progresses. Just as with the expansion of web and cloud-based services, blockchain technologies are increasingly viewed as the second Internet revolution.

The broad range of applications that are being, and will continue to be, explored places new requirements on what the technologies need to support. The open source Ethereum project introduced Smart Contract technology on top of the blockchain. To address the transaction performance bottleneck, consensus mechanisms such as proof-of-authority (PoA) [21], proof-of-stake (PoS) [22] and proof-of-importance (PoI) [23] have been developed.
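Instead of a proof-of-work race, proof-of-stake protocols select the next block proposer with a probability tied to the amount of currency it has locked up as stake, so no energy-intensive hashing is needed. A deliberately simplified sketch of stake-weighted selection; real PoS protocols add verifiable randomness, slashing of misbehaving validators and finality rules, none of which are modeled here, and the stakes are hypothetical:

```python
import random


def pick_validator(stakes: dict, rng: random.Random) -> str:
    """Choose the next block proposer with probability proportional to its stake."""
    validators = list(stakes)
    weights = [stakes[v] for v in validators]
    return rng.choices(validators, weights=weights, k=1)[0]


stakes = {"alice": 50, "bob": 30, "carol": 20}  # hypothetical staked amounts
rng = random.Random(42)                          # fixed seed for reproducibility
counts = {v: 0 for v in stakes}
for _ in range(10_000):
    counts[pick_validator(stakes, rng)] += 1
print(counts)  # roughly 50% / 30% / 20% of the 10,000 slots
```

Because a validator’s influence grows with the value it has at risk, attacking the chain requires acquiring a large share of the currency rather than a large share of computing power.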

A major blockchain development is underway to enable the expansion of the ecosystem and to support interoperability among multiple blockchain networks and token exchange. This is akin to physical currency exchange. The first adoption of this appears to be in the gaming industry.

Another major blockchain development is focusing on some cyber security needs, including privacy protection for digital assets and transactions, as well as personal identity verification and protection. As the application of these technologies and products is often intertwined with existing cyber security mechanisms, the adoption cycle is expected to be more protracted, with multiple iterations that incorporate practical learnings.

References

[1] “Notes from the AI frontier: Applications and value of deep learning”, McKinsey Global Institute, April 2018

[2] “A 7nm CMOS Technology Platform for Mobile and High-Performance Compute Application”, S. Narasimha et al., GlobalFoundries, IEDM17-689, 2017

[3] “Industry Giants TSMC and Intel Vow to Focus on 3D IC Packaging”, Emmy Yi, Semi.org, June 2019

[4] “Cerebras Unveils AI Supercomputer-On-A-Chip”, Karl Freund, Forbes, Aug. 2019

[5] “Will Carbon Nanotube Memory Replace DRAM?”, Bill Gervasi, Nantero, IEEE Computer Society, April 2019

[6] “Quantum Supremacy Using a Programmable Superconducting Processor”, John Martinis, Sergio Boixo, Google AI Blog, Oct. 2019

[7] “Beyond Today AI”, Intel

[8] “Silicon Valley startup that makes 16 lens camera gets $121M from Softbank”, Business Insider, July 2018

[9] “For safer localization of autonomous vehicles, WaveSense looks below the road”, Carla Lauter, Spar3D, July 2019

[10] “IEEE 5G and Beyond Technology Roadmap White Paper”, IEEE

[11] “One Step Closer to Nationwide 5G: T-Mobile, Qualcomm and Ericsson take Massive Step Toward Delivering Broad 5G on Low-Band Spectrum”, T-Mobile, July 2019

[12] “NoSQL Tutorial: Learn NoSQL Features, Types, What is, Advantages”, Guru99.com, Nov. 2019

[13] “Cloud storage: What is it and how does it work?”, How It Works, April 2019

[14] “Data storage: Everything you need to know about emerging technologies”, Robin Harris, ZD Net, May 2019

[15] “What is NFV and what are its benefits”, Lee Doyle, Network World, Feb. 2018

“Network Functions Virtualization Guide plus NFV Vs SDN”, Tim Keary, comparitech.com, Nov. 2018

[16] “Detecting advanced threats with user behavior analytics”, Saryu Nayyar, Network World, March 2015

[17] “What is an artificial neural network? Here’s everything you need to know”, Lee Dormehl, Digital Trends, Jan. 2019

“What Are Artificial Neural Networks – A Simple Explanation For Absolutely Anyone”, Bernard Marr, Sept. 2018

[18] “Introduction to ResNets”, Connor Shorten, Towards Data Science, Jan.

[19] “Understanding RNN and LSTM”, Aditi Mittal, Towards Data Science, Oct.

“A Beginner’s Guide to LSTMs and Recurrent Neural Networks”, Chris Nicholson, Skymind.ai

[20] “Intuitively Understanding Variational Autoencoders”, Irhum Shafkat, Towards Data Science, Feb. 2018

“Understanding Variational Autoencoders (VAEs)”, Joseph Rocca, Towards Data Science, Sept.

[21] “What is Proof of Authority Consensus? Staking Your Identity on The Blockchain”, Brian Curran, Blockonomi, July 2018

[22] “What is Proof of Stake? (PoS)”, Max Thake, Medium, July 2018

[23] “Proof of Importance Explained”, Bisade Asolo, MyCryptopedia, Nov. 2018